## Project I: Body in Space, Spaces of the Body

**Create a work that uses digital technology to explore the human body in space.** This may be the body in relation to itself, to other bodies or to the environment, and/or its movements and gestures. You can work with **face tracking (FaceOSC), hand tracking (Leap Motion), body tracking (Kinect) or distance sensing (Arduino)**. You may also propose a tool or sensor that we did not cover in class (pending approval). The project can take many forms, including (but not limited to) an interactive experience, responsive sound, a performance or a visualization.

You may work individually or in small groups (maximum 3 people).

### Due Monday 15th of February:

Post a short description of your idea on the blog, with one or more sketches. We will discuss your idea in class.

### Due Monday 7th of March:

- Presentation of the final work in class

- Documentation on the blog, covering:

    - Concept: Present a clear and concise overview of the project concept. What was your idea? What motivated or inspired you?

    - Research/Process: What other projects are related to yours? What other artists or works inspired you? What tools or software did you use? Provide sketches or prototypes from the development process.

    - Results: Video and images of the final outcome.

    - Code references: Links to code sources or examples you used extensively in the project.

- Code, zipped and delivered via email

## Hand Tracking

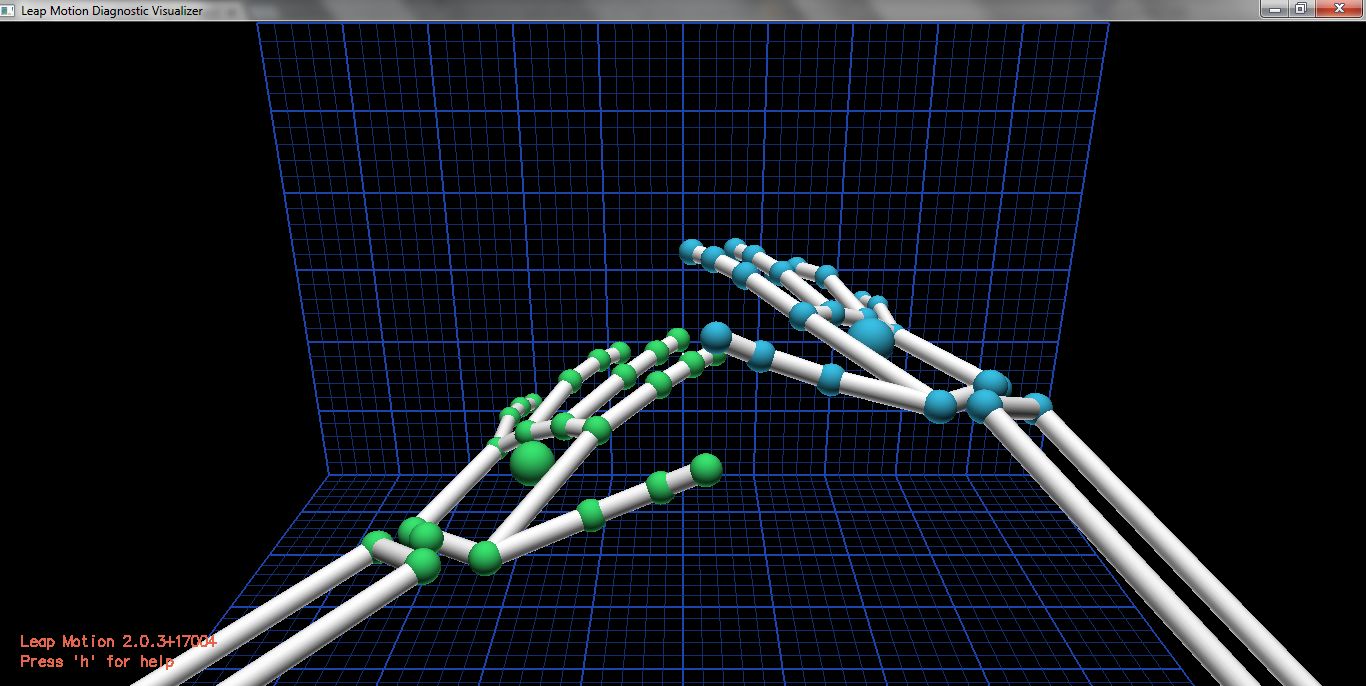

## Introduction to the Leap Motion

The Leap Motion Controller uses infrared cameras to track your hands at up to 200 frames per second, giving you a 150° field of view and roughly 8 cubic feet (about 0.23 m³) of interactive 3D space.

Leap Motion website: https://www.leapmotion.com/

Leap Motion installation: https://developer.leapmotion.com/downloads

### Examples:

- The Manual Input Sessions: http://www.flong.com/projects/mis/

- Contact tangible audio interface: https://vimeo.com/82107250

- Augmented Hand Series: http://www.flong.com/projects/augmented-hand-series/

- Human Electro: http://humanelectro.net/Leap

- Theremin: https://vimeo.com/114767889

- Leap Motion + Pepper's Ghost technique: https://vimeo.com/71216887

- Leap Motion Ball Maze: https://www.youtube.com/watch?v=I_-UpOYULxw

### Leap Motion API documentation

#### Controller

The Controller class is your interface to the device. You create an instance of Controller and poll it to get the most recent frame.
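The polling pattern can be sketched as a small self-contained simulation. This is not the Leap Motion SDK: `FakeController`, `Frame`, `publish` and `simulate` are hypothetical names. In the real Java SDK you would create a `com.leapmotion.leap.Controller` and call its `frame()` method (assuming SDK version 2). Because polling returns the most recent frame, a loop that runs faster than new data arrives will see the same frame more than once; comparing frame ids avoids handling it twice.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

public class PollingSketch {
    // Hypothetical stand-in for a tracking frame: just an id and a timestamp.
    record Frame(long id, long timestampMicros) {}

    // Hypothetical stand-in for the Controller: it only ever holds the latest frame.
    static class FakeController {
        private Frame latest = null; // no data until the first frame arrives

        // Simulates the device delivering a new frame.
        void publish(Frame frame) { latest = frame; }

        // Polling returns whatever frame is most recent, even if we have seen it already.
        Frame frame() { return latest; }
    }

    // Runs `ticks` iterations of a draw loop; the device publishes a frame on each tick
    // listed in `publishTicks`. Returns the ids of the frames that were actually processed.
    static List<Long> simulate(int ticks, Set<Integer> publishTicks) {
        FakeController controller = new FakeController();
        long lastSeenId = -1;
        List<Long> processed = new ArrayList<>();
        for (int tick = 0; tick < ticks; tick++) {
            if (publishTicks.contains(tick)) {
                controller.publish(new Frame(tick, tick * 5_000L));
            }
            Frame frame = controller.frame();              // poll once per loop iteration
            if (frame != null && frame.id() > lastSeenId) { // skip frames already handled
                processed.add(frame.id());
                lastSeenId = frame.id();
            }
        }
        return processed;
    }

    public static void main(String[] args) {
        System.out.println(simulate(6, Set.of(1, 2, 4))); // [1, 2, 4]
    }
}
```

In the opposite case, when the loop is slower than the tracker, frames are skipped rather than repeated; the SDK's `frame(int history)` variant is one way to look back at earlier frames.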

#### Frame

A Frame is a snapshot of the hand and pointable tracking data, plus any live gestures, at a single moment in time.
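To make "a moment in time" concrete, here is a minimal sketch of a frame as an immutable snapshot. The `Frame`, `Hand`, `Pointable` and `Gesture` records are hypothetical stand-ins, not SDK classes. It assumes timestamps are in microseconds, as with the SDK's `Frame.timestamp()`, and shows that two frames 5 ms apart correspond to the 200 frames per second mentioned above.

```java
import java.util.ArrayList;
import java.util.List;

public class FrameSnapshot {
    // Hypothetical stand-ins for the data a frame carries.
    record Hand(int id) {}
    record Pointable(int id, boolean isTool) {}
    record Gesture(int id, String type) {}

    // Hypothetical stand-in for a Frame: one immutable snapshot of tracking data.
    record Frame(long id, long timestampMicros, List<Hand> hands,
                 List<Pointable> pointables, List<Gesture> gestures) {
        Frame {
            // Defensive copies: the snapshot cannot change after it has been taken.
            hands = List.copyOf(hands);
            pointables = List.copyOf(pointables);
            gestures = List.copyOf(gestures);
        }
    }

    // Instantaneous frame rate computed from two frames' timestamps (microseconds).
    static double framesPerSecond(Frame earlier, Frame later) {
        long dt = later.timestampMicros() - earlier.timestampMicros();
        if (dt <= 0) {
            throw new IllegalArgumentException("frames must be in chronological order");
        }
        return 1_000_000.0 / dt;
    }

    public static void main(String[] args) {
        List<Hand> live = new ArrayList<>(List.of(new Hand(7)));
        Frame f1 = new Frame(1, 0, live, List.of(), List.of());
        live.add(new Hand(8));                        // the live list keeps changing...
        System.out.println(f1.hands().size());        // ...but the snapshot still has 1 hand
        Frame f2 = new Frame(2, 5_000, live, List.of(), List.of());
        System.out.println(framesPerSecond(f1, f2));  // 5 ms apart: 200.0
    }
}
```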

#### Hand

A Hand holds all the information the frame has about a detected hand. This includes the palm position and velocity, information about a sphere that would fit in the hand, and a list of the hand’s fingers. Each Hand has an id, which you can use to refer to the same hand in a later frame.
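Following a hand across frames by its id can be sketched like this. `Hand`, `Vec3`, `findById` and `palmTravel` are hypothetical names used for illustration; in the Java SDK, `Frame.hand(id)` plays the lookup role and returns an invalid Hand (check `isValid()`) when that id is no longer tracked. Positions are assumed to be in millimetres.

```java
import java.util.List;
import java.util.Optional;

public class HandById {
    // Hypothetical 3D vector, in millimetres.
    record Vec3(double x, double y, double z) {
        Vec3 minus(Vec3 o) { return new Vec3(x - o.x, y - o.y, z - o.z); }
        double length() { return Math.sqrt(x * x + y * y + z * z); }
    }

    // Hypothetical stand-in for a tracked hand: an id and a palm position.
    record Hand(int id, Vec3 palmPosition) {}

    // Looks up a hand by id in a frame's hand list; empty if it is not tracked there.
    static Optional<Hand> findById(List<Hand> frameHands, int id) {
        return frameHands.stream().filter(h -> h.id() == id).findFirst();
    }

    // Distance the palm of hand `id` moved between two frames, if it appears in both.
    static Optional<Double> palmTravel(List<Hand> before, List<Hand> after, int id) {
        Optional<Hand> a = findById(before, id);
        Optional<Hand> b = findById(after, id);
        if (a.isEmpty() || b.isEmpty()) {
            return Optional.empty();
        }
        return Optional.of(b.get().palmPosition().minus(a.get().palmPosition()).length());
    }

    public static void main(String[] args) {
        List<Hand> f1 = List.of(new Hand(12, new Vec3(0, 200, 0)));
        List<Hand> f2 = List.of(new Hand(12, new Vec3(30, 240, 0)),
                                new Hand(13, new Vec3(-50, 180, 10)));
        System.out.println(palmTravel(f1, f2, 12)); // Optional[50.0]
        System.out.println(palmTravel(f1, f2, 13)); // Optional.empty (hand 13 is new)
    }
}
```

Returning an empty result rather than a default distance makes it explicit that a hand which just entered (or left) the field of view has no previous position to compare against.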

#### Arms

An Arm is a bone-like object that provides the orientation, length, width, and end points of an arm. When the elbow is not in view, the Leap Motion controller estimates its position based on past observations as well as typical human proportions.
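The relationship between an arm's end points, its length and its orientation can be sketched as follows. The `Arm` and `Vec3` records are hypothetical stand-ins; the SDK's own `Arm` class offers comparable accessors such as `elbowPosition()` and `wristPosition()`, so check the API reference for the exact set. Units are assumed to be millimetres.

```java
public class ArmGeometry {
    // Hypothetical 3D vector, in millimetres.
    record Vec3(double x, double y, double z) {
        Vec3 minus(Vec3 o) { return new Vec3(x - o.x, y - o.y, z - o.z); }
        Vec3 divide(double s) { return new Vec3(x / s, y / s, z / s); }
        double length() { return Math.sqrt(x * x + y * y + z * z); }
    }

    // Hypothetical stand-in for an arm, described by its two end points and its width.
    record Arm(Vec3 elbow, Vec3 wrist, double width) {
        // Forearm length: distance between the elbow and the wrist.
        double length() { return wrist.minus(elbow).length(); }

        // Orientation as a unit vector pointing from the elbow toward the wrist.
        Vec3 direction() { return wrist.minus(elbow).divide(length()); }
    }

    public static void main(String[] args) {
        Arm arm = new Arm(new Vec3(0, 0, 0), new Vec3(0, 150, 200), 60);
        System.out.println(arm.length());    // 250.0
        System.out.println(arm.direction()); // Vec3[x=0.0, y=0.6, z=0.8]
    }
}
```

When the elbow is estimated rather than seen, these derived values inherit that uncertainty, so treat them as approximate near the edge of the field of view.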


#### Pointable

A Pointable can be a finger or a tool. The Leap Motion Controller distinguishes “tools” from fingers by the fact that a pen or pencil is thinner, straighter and longer than a finger. Like Hands, Pointables have an id; they also have a direction and a tip position and velocity.
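The "thinner, straighter and longer" idea can be illustrated with a toy classifier. This is a hypothetical heuristic written for teaching, not the algorithm the Leap Motion software uses, and the thresholds are invented; with the SDK you would simply read `Pointable.isTool()`. Straightness is measured here as end-to-end distance divided by path length along sampled points.

```java
public class ToolHeuristic {
    // Hypothetical measurements of a detected pointable, in millimetres.
    record Pointable(int id, double widthMm, double lengthMm, double straightness) {}

    // Illustrative thresholds only; they are NOT values used by the Leap Motion software.
    static final double MAX_TOOL_WIDTH_MM = 10.0;
    static final double MIN_TOOL_LENGTH_MM = 90.0;
    static final double MIN_TOOL_STRAIGHTNESS = 0.98;

    // Straightness of a sampled centre line: end-to-end distance divided by path length.
    // 1.0 means perfectly straight; bent shapes score lower.
    static double straightness(double[][] points) {
        double path = 0;
        for (int i = 1; i < points.length; i++) {
            path += distance(points[i - 1], points[i]);
        }
        if (path == 0) {
            return 1.0; // zero or one sample: treat as straight
        }
        return distance(points[0], points[points.length - 1]) / path;
    }

    static double distance(double[] a, double[] b) {
        double dx = a[0] - b[0], dy = a[1] - b[1], dz = a[2] - b[2];
        return Math.sqrt(dx * dx + dy * dy + dz * dz);
    }

    // A pointable counts as a tool only if it is thinner, longer AND straighter than a finger.
    static boolean looksLikeTool(Pointable p) {
        return p.widthMm() < MAX_TOOL_WIDTH_MM
                && p.lengthMm() > MIN_TOOL_LENGTH_MM
                && p.straightness() >= MIN_TOOL_STRAIGHTNESS;
    }

    public static void main(String[] args) {
        double[][] pencilLine = {{0, 0, 0}, {0, 75, 0}, {0, 150, 0}};
        double[][] bentFinger = {{0, 0, 0}, {0, 30, 0}, {0, 55, 15}};
        Pointable pencil = new Pointable(1, 7, 150, straightness(pencilLine));
        Pointable finger = new Pointable(2, 16, 70, straightness(bentFinger));
        System.out.println(looksLikeTool(pencil)); // true
        System.out.println(looksLikeTool(finger)); // false
    }
}
```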

#### Fingers

The Leap Motion controller provides information about each finger on a hand. If all or part of a finger is not visible, its characteristics are estimated from recent observations and the anatomical model of the hand. Fingers are identified by type: thumb, index, middle, ring and pinky.
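Finger types become useful once you combine them. The sketch below names a few simple poses from the set of extended fingers. It assumes you already know which fingers are extended (the SDK 2 `Finger` class exposes `type()` and `isExtended()`; verify against the API reference). `FingerType`, `classify` and the pose names are hypothetical.

```java
import java.util.EnumSet;
import java.util.Set;

public class FingerPose {
    // Stand-in enum mirroring the five finger type names; not the SDK's own enum.
    enum FingerType { THUMB, INDEX, MIDDLE, RING, PINKY }

    // Names a simple hand pose from the set of fingers that are currently extended.
    static String classify(Set<FingerType> extended) {
        if (extended.isEmpty()) return "fist";
        if (extended.size() == FingerType.values().length) return "open hand";
        if (extended.equals(EnumSet.of(FingerType.INDEX))) return "pointing";
        if (extended.equals(EnumSet.of(FingerType.INDEX, FingerType.MIDDLE))) return "peace sign";
        return "other";
    }

    public static void main(String[] args) {
        System.out.println(classify(EnumSet.noneOf(FingerType.class)));               // fist
        System.out.println(classify(EnumSet.allOf(FingerType.class)));                // open hand
        System.out.println(classify(EnumSet.of(FingerType.INDEX)));                   // pointing
        System.out.println(classify(EnumSet.of(FingerType.THUMB, FingerType.PINKY))); // other
    }
}
```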

#### Gesture

Gesture is the superclass for the built-in gestures that the device recognises. When a gesture is detected, it is added to the frame alongside the hand and pointable data. The subclasses are CircleGesture, SwipeGesture, KeyTapGesture and ScreenTapGesture.
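A superclass with four subclasses suggests dispatching on the concrete type. The sketch below uses hypothetical records named after the SDK subclasses, with invented fields and action labels. With SDK 2 you would instead enable each gesture, e.g. `controller.enableGesture(Gesture.Type.TYPE_CIRCLE)`, and iterate over `frame.gestures()`.

```java
import java.util.ArrayList;
import java.util.List;

public class GestureDispatch {
    // Stand-ins named after the SDK's gesture subclasses; the fields are illustrative only.
    interface Gesture { int id(); }
    record CircleGesture(int id, boolean clockwise) implements Gesture {}
    record SwipeGesture(int id, double dx) implements Gesture {}
    record KeyTapGesture(int id) implements Gesture {}
    record ScreenTapGesture(int id) implements Gesture {}

    // Maps one gesture to an action label; the labels are arbitrary examples.
    static String actionFor(Gesture g) {
        if (g instanceof CircleGesture c) return c.clockwise() ? "volume up" : "volume down";
        if (g instanceof SwipeGesture s) return s.dx() > 0 ? "next" : "previous";
        if (g instanceof KeyTapGesture) return "play/pause";
        if (g instanceof ScreenTapGesture) return "select";
        return "unknown";
    }

    // Handles every gesture found in one frame, in order.
    static List<String> actionsFor(List<Gesture> frameGestures) {
        List<String> actions = new ArrayList<>();
        for (Gesture g : frameGestures) {
            actions.add(actionFor(g));
        }
        return actions;
    }

    public static void main(String[] args) {
        List<Gesture> gestures = List.of(
                new CircleGesture(1, true), new SwipeGesture(2, -120), new KeyTapGesture(3));
        System.out.println(actionsFor(gestures)); // [volume up, previous, play/pause]
    }
}
```

Keeping the mapping in one function makes it easy to swap the labels for sound, visuals or other responses in your own project.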

**API Documentation Link**

https://developer.leapmotion.com/documentation/java/devguide/Leap_Overview.html

### Leap Motion and Processing

- GitHub repository: https://github.com/nok/leap-motion-processing

- Download the library and install it in Processing (the Leap Motion software must also be installed)

- Examples from the repository: https://github.com/nok/leap-motion-processing/tree/master/examples

### Leap Motion and JavaScript

- Leapjs library: https://github.com/leapmotion/leapjs

- Examples: https://developer.leapmotion.com/gallery/category/example/tags/javascript/order/popularity

- Documentation: https://developer.leapmotion.com/documentation/javascript/index.html

### Due for next class

*Choose one of the following:*

#### Short Assignment 1: Body-sensing technology research

Research a technology or sensor that can be used to extend, augment or sense the human body (it must be something we did not discuss in class). Post your findings on the class blog with a description of the technology and why you chose it. Include links, and assess how accessible the tool or sensor is (Is it commercially available? DIY? Something else?).

– OR –

#### Short Assignment 2: Leap Motion

Create an interactive script with Leap Motion (in Processing or in JavaScript).

You can start from the examples or from scratch.
